“You explicitly agree that your Content may be used to train AI” – the gist of a clause buried in SoundCloud’s updated terms that sent shockwaves through the music world faster than a viral TikTok dance. The February 2024 Terms of Service update went largely unnoticed until tech ethicist Ed Newton-Rex highlighted it on social media last week.

What followed was digital drama in real time. Artists expressed alarm, SoundCloud issued clarifications, and the music community engaged in heated debate about how platforms should handle creative work in the AI era.

The updated terms state that “in the absence of a separate agreement,” users “explicitly agree that your Content may be used to inform, train, develop or serve as input to artificial intelligence.” This broad language has raised significant questions about consent and creator rights on the platform.

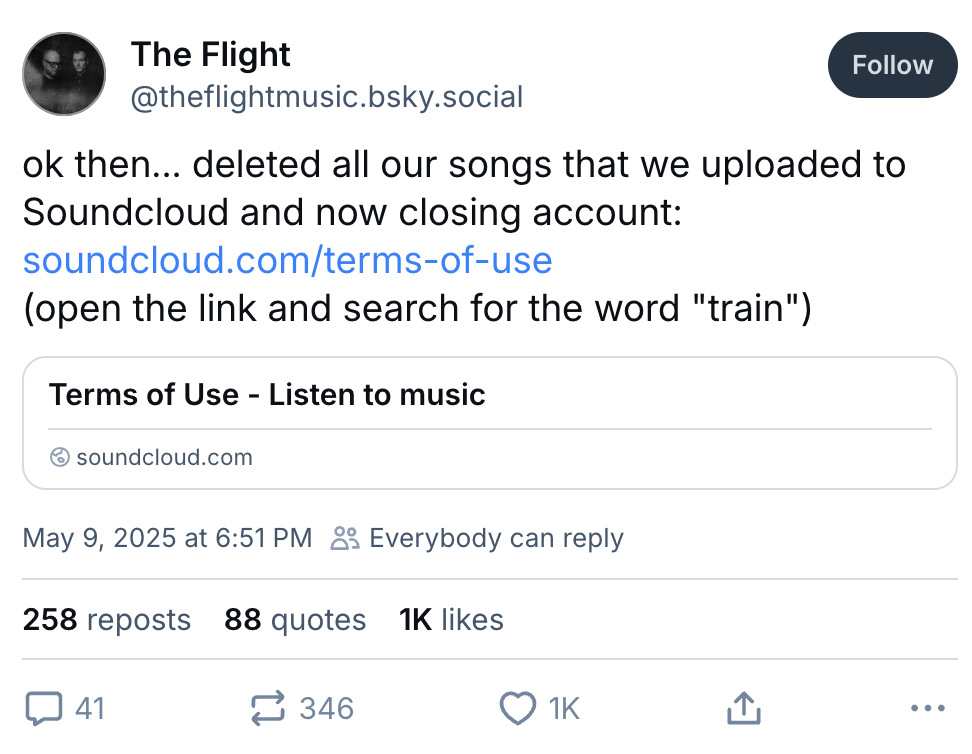

The reaction hit like a bass drop at 3 a.m. The Flight, composers behind soundtracks for “Edge of Tomorrow” and “Alien: Isolation,” deleted their entire catalog from the platform with a terse goodbye: “Ok then… deleted all our songs that we uploaded to SoundCloud and now closing account.” Their departure wasn’t just symbolic – it represented concrete concerns about how their work might be used.

SoundCloud responded with a statement insisting they “have never used artist content to train AI models” despite the language in their terms. The contrast between the terms’ broad permissions and the company’s narrower public statements raised eyebrows throughout the industry.

Their spokesperson claimed the terms update merely clarified how content interacts with AI for “personalized recommendations, content organization, fraud detection, and improvements to content identification with the help of AI Technologies.” This explanation left many artists questioning why such broad permission language was necessary for these limited uses.

The timing of the terms update generated discussion among artists and industry observers. SoundCloud has been exploring AI technologies, including partnerships with various AI music tools. In a press release earlier this year, the company positioned these tools as ways to “democratize music creation for all artists.”

The company’s statement to The Hollywood Reporter noted that their Terms of Service “explicitly prohibits the use of licensed content, such as music from major labels, for training any AI models, including generative AI.” This distinction raised questions about different standards of protection for independent versus signed artists on the platform.

“We understand the concerns raised and remain committed to open dialogue,” a SoundCloud representative stated. The company emphasized that any future application of AI would be “designed to support human artists, enhancing the tools, capabilities, reach and opportunities available to them on our platform.”

“Should we ever consider using user content to train generative AI models, we would introduce clear opt-out mechanisms in advance,” SoundCloud promised in a follow-up statement to The Hollywood Reporter. This statement came in response to growing artist concerns about consent and control.

The stakes in this debate transcend mere principle. AI music generation has attracted significant investment, with companies like Suno recently securing $125 million in funding. These technologies rely on vast amounts of training data – which makes artists increasingly conscious of how their work might be used.

Ed Newton-Rex, founder of ethical AI nonprofit Fairly Trained, noted that SoundCloud’s clarification “doesn’t actually rule out SoundCloud training generative AI models on their users’ music in future.” His observation highlights the gap between current statements and future possibilities allowed by the terms.

The relationship between platforms and creators has shifted like the ground during an earthquake. User content – once the product platforms helped promote – is now potential training data worth billions.

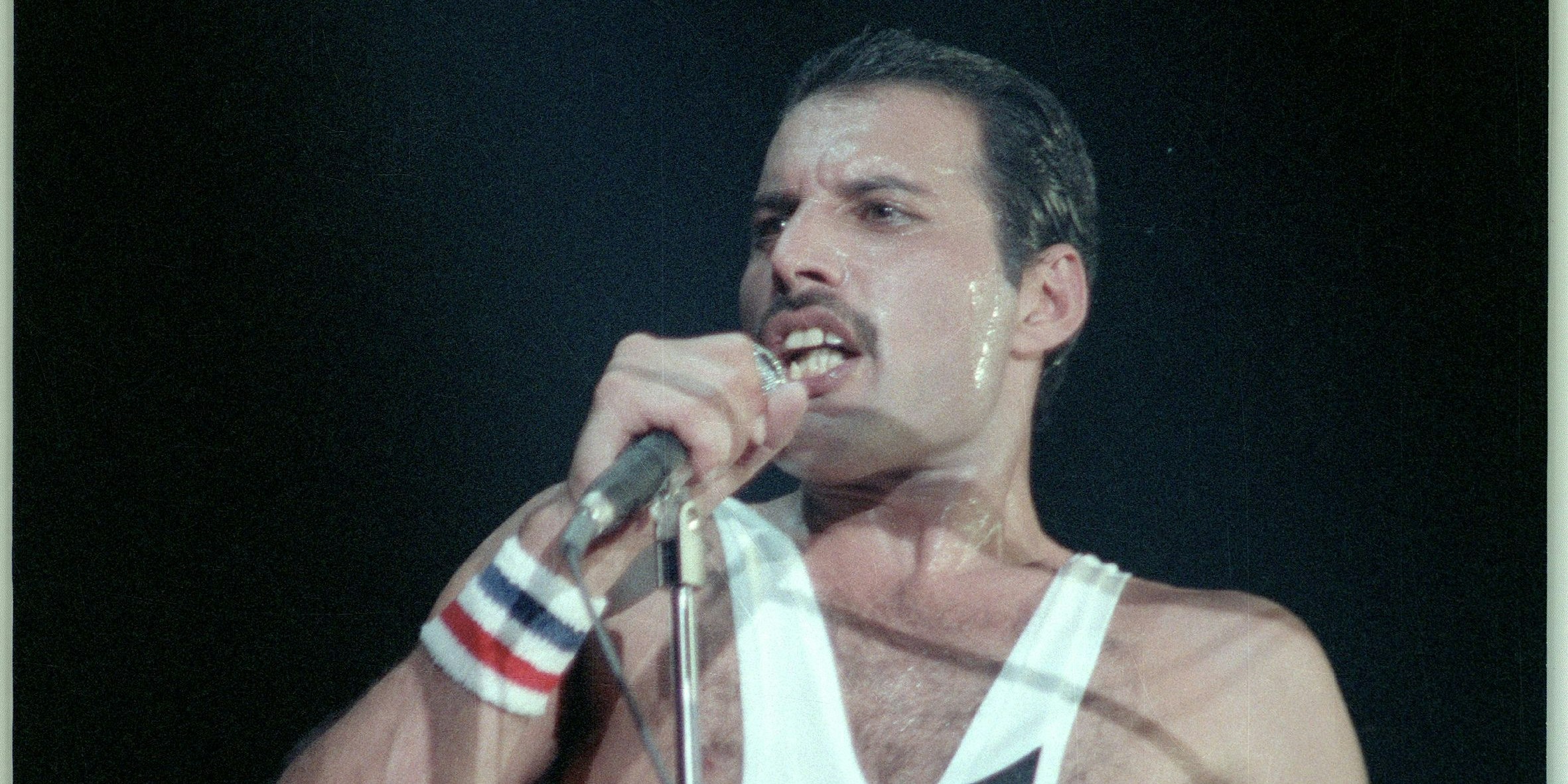

The controversy highlights a broader tension in the creative industries. As platforms integrate AI features, they must balance innovation with respecting creator rights and maintaining trust with their user communities. The reaction to SoundCloud’s terms update demonstrates how sensitive these issues have become – particularly in an industry that has already witnessed decades of contentious copyright battles, from alleged rip-off songs to dozens of other infamous tracks that sparked ugly legal disputes over the thin line between inspiration and theft.

“We understand the concerns raised and remain committed to open dialogue,” SoundCloud claims, sounding suspiciously like every corporate response to controversy since the invention of PR. But the message to artists is clear: platforms increasingly view creative work as computational fodder rather than human expression.

Independent artists face a stark choice that feels ripped from a dystopian Netflix series: trust public promises that the legal terms do not back up, or join the exodus to platforms with clearer commitments. Either way, this episode exposes how digital platforms can redefine relationships with creators through fine print – no consent or compensation required.